A research team co-led by City University of Hong Kong (CityU) recently developed an innovative human-machine interface (HMI) that can teleoperate robots to imitate the user’s actions and perform complicated tasks. The breakthrough technology demonstrates the potential for conducting COVID-19 swab tests and nursing patients with infectious diseases.

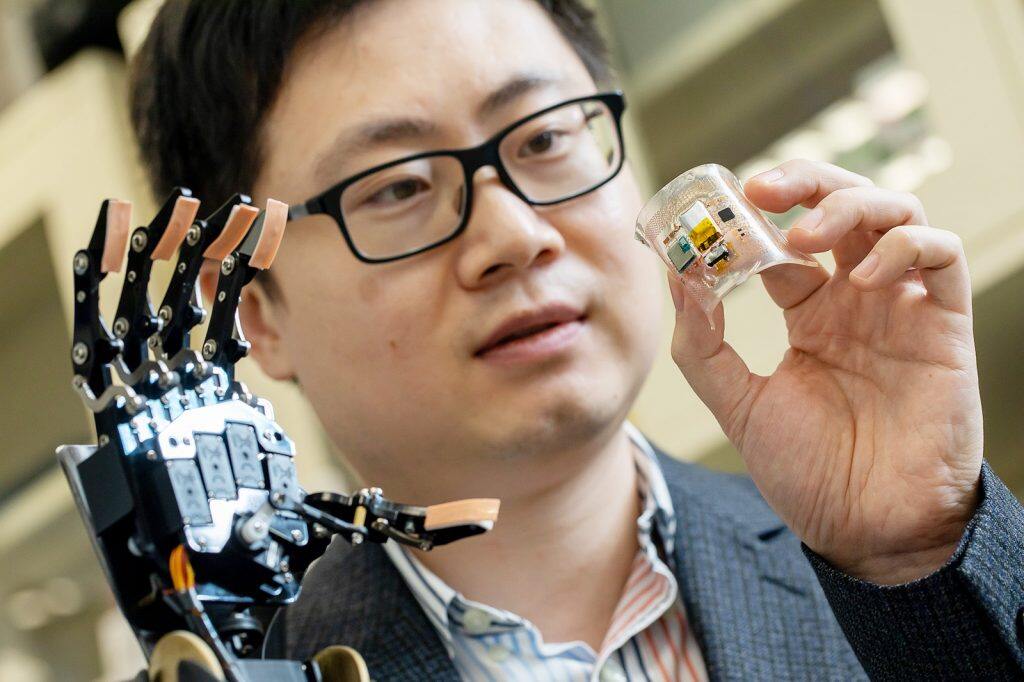

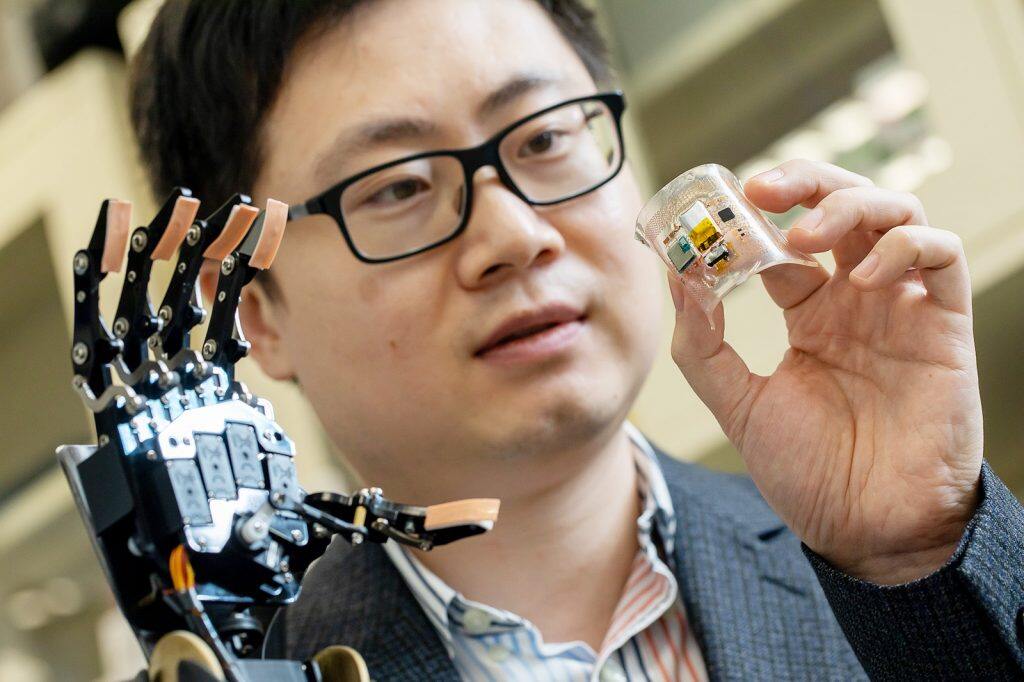

A key part of the advanced HMI system, named Robotic VR, is the flexible, multi-layered electronic skin developed by Dr Yu Xinge, Associate Professor in the Department of Biomedical Engineering (BME) at CityU, and his team. The electronic skin's bottom layer, made of skin-tone elastomeric silicone, serves as a soft adhesive interface that can be mounted on the user's skin and joints.

“This new system enables teleoperated robots to conduct complicated tasks. Doctors wearing the HMI system with VR glasses can remotely control the robots and experience the robots' tactile sensations to conduct surgery accurately, and medical workers can remotely manipulate the robots to look after infectious patients or collect bio-samples, thus greatly decreasing the infection risk,” says Dr Yu.

The team conducted experiments such as remotely controlling the robotic hand to collect throat swab samples for COVID-19 tests, and teleoperating humanoid robots to clean a room and even provide patient care. The team is developing a next-generation system for the robotic collection of nasal swab samples.

The layers of the electronic skin are interconnected with a collection of chip-scale integrated circuits and sensing components, including resistors, capacitors, a Bluetooth module, a microcontroller unit (MCU), and soft sensors and actuators developed by the team.

The sensors of the Robotic VR system can accurately detect and convert subtle human motion into electrical signals, which are processed by the MCU and wirelessly transmitted to the target robot. In this way, the user can teleoperate the robot to imitate their motion to accomplish tasks remotely. The pressure sensors on the robot can send feedback signals to control the vibration intensity of the haptic actuators through the Bluetooth module, thus providing haptic feedback for the user. The user can then precisely control and adjust the motion and strength of the robot, or its arm, according to the intensity of the feedback.
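
The press release does not describe the system's firmware, but the two directions of the signal flow in the paragraph above (user motion to robot commands, robot pressure to haptic vibration) can be sketched as follows. This is a minimal illustration under stated assumptions, not the team's implementation: the names `BendCalibration`, `raw_to_joint_angle`, `pressure_to_vibration` and `teleop_step`, the calibration values, the 50 kPa full-scale pressure, the 0 to 255 drive levels and the linear mappings are all hypothetical, and the Bluetooth/wireless transport is left out entirely.

```python
from dataclasses import dataclass


# Hypothetical per-sensor calibration: raw readings recorded with the joint
# straight and fully bent. The source gives no such values.
@dataclass
class BendCalibration:
    raw_straight: int
    raw_bent: int
    max_angle_deg: float = 90.0


def raw_to_joint_angle(raw: int, cal: BendCalibration) -> float:
    """Linearly map a raw sensor reading to a joint angle, clamped to range."""
    span = cal.raw_bent - cal.raw_straight
    if span == 0:
        raise ValueError("calibration points must differ")
    fraction = (raw - cal.raw_straight) / span
    fraction = min(max(fraction, 0.0), 1.0)  # clamp readings outside calibration
    return fraction * cal.max_angle_deg


def pressure_to_vibration(pressure_kpa: float,
                          max_pressure_kpa: float = 50.0,
                          levels: int = 255) -> int:
    """Map a robot-side pressure reading to a haptic drive level in 0..levels."""
    fraction = min(max(pressure_kpa / max_pressure_kpa, 0.0), 1.0)
    return round(fraction * levels)


def teleop_step(raw_readings, calibrations, robot_pressures_kpa):
    """One loop iteration: user motion -> robot joint commands,
    robot pressure -> haptic vibration levels sent back to the user."""
    commands = [raw_to_joint_angle(r, c)
                for r, c in zip(raw_readings, calibrations)]
    haptics = [pressure_to_vibration(p) for p in robot_pressures_kpa]
    return commands, haptics


if __name__ == "__main__":
    cal = BendCalibration(raw_straight=200, raw_bent=800)
    print(teleop_step([500, 200], [cal, cal], [25.0, 100.0]))
    # prints ([45.0, 0.0], [128, 255])
```

A stronger grip on the robot side yields a higher drive level, which is the cue the user would rely on to adjust the robot's motion and strength; a real system would presumably use calibrated, possibly non-linear sensor models.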

“The new system is stretchable and can be tightly mounted on human skin, and even over the whole human body, for long periods. In addition, the interface provides both haptic and visual feedback, giving users an immersive experience,” says Dr Yu.

An HMI links users to robots or computers and plays a significant role in robotic teleoperation. However, conventional HMIs are based on bulky, rigid and expensive machines, and their lack of adequate feedback for users limits their use in complicated tasks.

Given Robotic VR's advanced circuit design and outstanding mechanical characteristics, Dr Yu believes it could be used to teleoperate various machines, such as driverless cars, and could allow people with disabilities to remotely manipulate a robot to carry heavy goods. He also expects the system to offer a new approach to wirelessly connecting people to a robot or a virtual character in the metaverse.

The system supports three wireless transmission methods, which can be chosen to suit the practical application: Bluetooth (up to tens of metres), Wi-Fi (up to about 100 metres), and the Internet (worldwide).
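
One way to picture choosing among the three transmission methods is a simple range-based selector. This is a hypothetical sketch, not how Robotic VR actually switches links: the `Transport` enum, `choose_transport` and the `same_local_network` flag are illustrative names, and the 30 m Bluetooth cut-off is an assumed value, since the source only says "tens of metres" (the 100 m Wi-Fi figure follows the stated "about 100 metres").

```python
from enum import Enum


class Transport(Enum):
    BLUETOOTH = "bluetooth"
    WIFI = "wi-fi"
    INTERNET = "internet"


# Assumed working ranges in metres (illustrative only). The source says
# Bluetooth reaches "tens of metres" and Wi-Fi "about 100 metres".
BLUETOOTH_RANGE_M = 30.0
WIFI_RANGE_M = 100.0


def choose_transport(distance_m: float,
                     same_local_network: bool = True) -> Transport:
    """Pick the shortest-range link that can still reach the robot."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m <= BLUETOOTH_RANGE_M:
        return Transport.BLUETOOTH
    # Wi-Fi only helps if operator and robot share a local network.
    if distance_m <= WIFI_RANGE_M and same_local_network:
        return Transport.WIFI
    return Transport.INTERNET


if __name__ == "__main__":
    for d in (5.0, 60.0, 5000.0):
        print(d, choose_transport(d).value)
```

Preferring the shortest-range link that works is an assumed design choice here; it avoids depending on network infrastructure when the robot is close by, while the Internet option covers remote operation at any distance.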

The research study was published in Science Advances under the title “Electronic Skin as Wireless Human Machine Interfaces for Robotic VR”. The corresponding authors are Dr Yu and Professor Xie Zhaoqian from Dalian University of Technology (DUT). The first authors are PhD students Liu Yiming, Yiu Chun-ki and post-doc fellow Dr Huang Ya from BME, and Ms Song Zhen from DUT.